Welcome

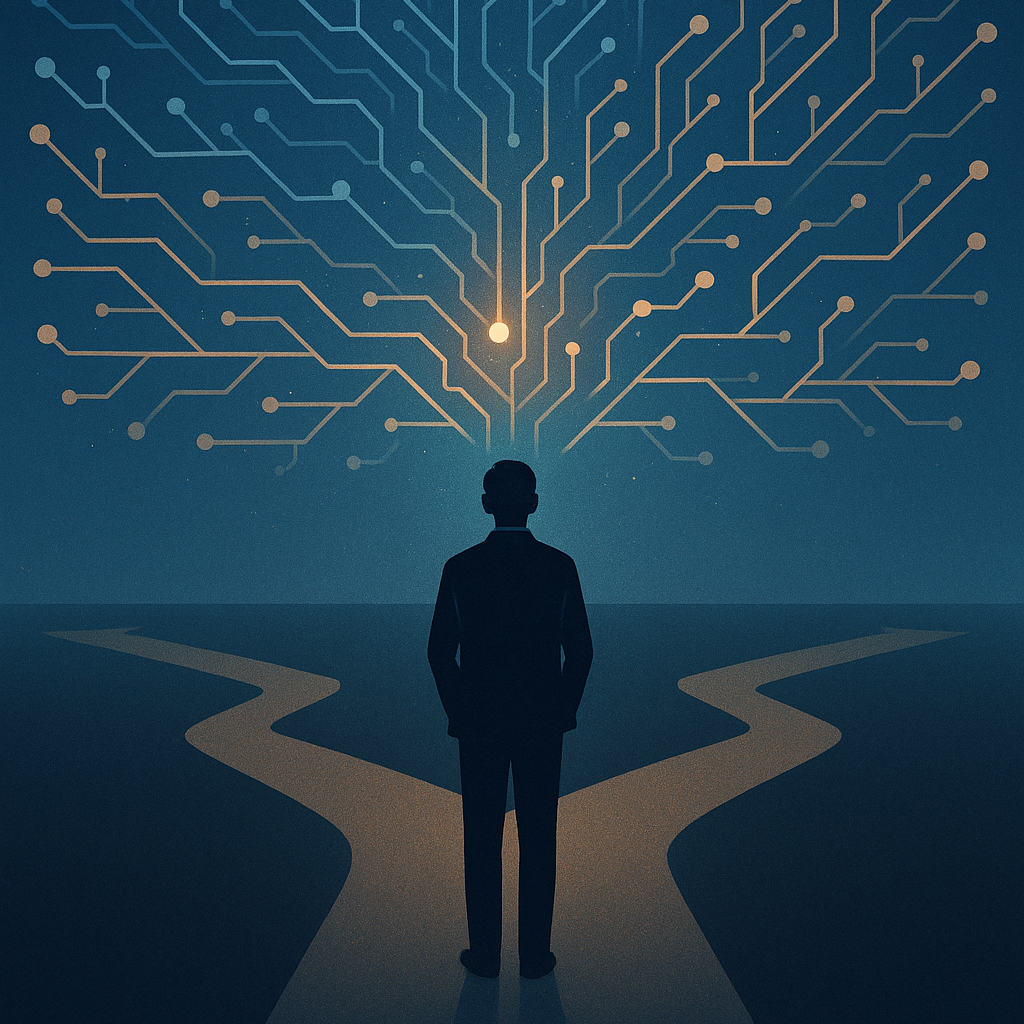

This blog examines systems that act faster than they can justify themselves.

It focuses on power, technology, and governance under conditions where decisions are irreversible, accountability is weakened, and explanation is treated as optional.

The work here is not partisan or predictive. It is architectural. It asks what happens when institutions optimize for speed and discretion at the expense of legitimacy.

And what survives when they do.

AI Governance as a Living Practice

Static governance cannot keep pace with AI. Frameworks written once soon become irrelevant. What leaders need are tools for live trade-offs. Dynamic governance treats rules as living practice. Personas, decision briefs, and transparent reasoning make choices visible. The aim is not compliance for its own sake but trust that adapts. Governance must be usable in real time, grounded in philosophy and tested in practice. That is how it becomes credible.

Why We Need Outsider Voices in the AI Conversation

The AI conversation is dominated by insiders. Corporate and academic voices hold the microphone. That dominance creates blind spots and weakens public trust. Outsiders bring the sharp questions insiders avoid. They bring lived experience and values such as fairness, usability, and dignity. If AI is to become legitimate, these voices cannot be invited late. They must be part of design from the beginning. True trust in AI will not be built by insiders alone.

Beyond Compliance: Personas as a Reasoning Layer for AI Governance

Compliance frameworks set a floor. They define what organizations must do, but when crises hit, compliance is rarely enough. Leaders need fast reasoning that can withstand pressure and still hold up to audit. Persona architecture provides one path. By simulating structured perspectives such as legal, equity, truth-seeker, and feasibility, leaders can explore diverse angles without losing accountability. Each persona generates options that are resilient in conflict and traceable to evidence. The result is not a replacement for compliance but a complement. Governance becomes adaptive in the moment while still auditable afterward. The power lies in combining philosophy with practice, so that decisions are not only defensible but also credible.
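
One way to picture the reasoning layer described above is as a small data structure. The sketch below is a hypothetical illustration, not the implementation behind these posts: the names `DecisionBrief`, `PersonaOption`, and `missing_personas`, and the evidence identifiers, are all assumptions made for the example. It shows the two properties the paragraph asks for: every option is tied to a named persona, and no option is recorded without evidence to trace it to.

```python
from dataclasses import dataclass, field
from typing import List

# The four perspectives named in the post.
PERSONAS = ["legal", "equity", "truth-seeker", "feasibility"]


@dataclass
class PersonaOption:
    """One option proposed from a single persona's perspective."""
    persona: str
    recommendation: str
    evidence: List[str]  # identifiers of the sources this option relies on


@dataclass
class DecisionBrief:
    """Collects persona options for one decision so they can be audited later."""
    question: str
    options: List[PersonaOption] = field(default_factory=list)

    def add(self, persona: str, recommendation: str, evidence: List[str]) -> None:
        # Refuse options that cannot be traced back to any evidence.
        if not evidence:
            raise ValueError(f"{persona}: an option needs at least one evidence reference")
        self.options.append(PersonaOption(persona, recommendation, list(evidence)))

    def missing_personas(self, required: List[str]) -> List[str]:
        # Perspectives that have not weighed in yet, in the order required.
        seen = {option.persona for option in self.options}
        return [name for name in required if name not in seen]


# Hypothetical usage: the question and evidence ids are made up for illustration.
brief = DecisionBrief("Should we pause the rollout?")
brief.add("legal", "Pause until notice obligations are reviewed", ["memo-01"])
brief.add("feasibility", "Pause only the affected region", ["runbook-07"])
print(brief.missing_personas(PERSONAS))
```

Rejecting evidence-free options at the moment they are added is the design choice that keeps the brief auditable afterward: the record cannot contain a recommendation that nobody can trace.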

Escaping the Companion Trap: Why Personas, Not Chatbots, Are the Future of AI

The AI industry is caught in a false choice. On one side are shallow chatbots designed as companions, which exploit loneliness and foster dependence. On the other side are generic platforms that promise efficiency but deliver little sustained value. Both are traps. The alternative is persona architecture. By designing AI as role-specific advisors, builders, or analysts, we gain systems with boundaries, ethics, and clarity of purpose. Personas allow for trust because they do not pretend to be friends. They are collaborators with defined scope and responsibility. This shift moves AI away from intimacy without reciprocity and toward differentiated value. The future will not be chatbots that simulate love. It will be role-based personas that deliver credibility, usefulness, and trust.

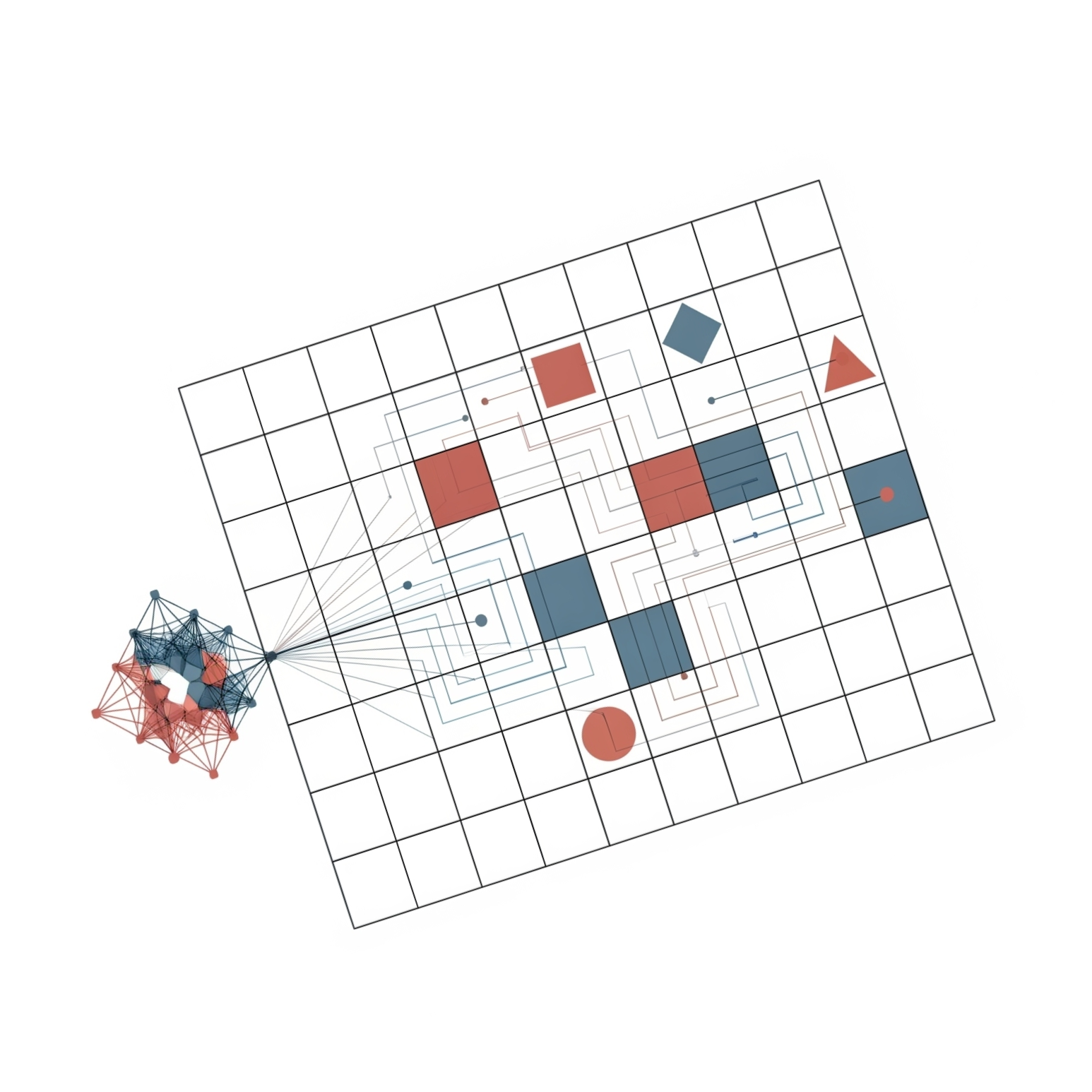

From 0 to 1: Cracking the ARC Prize in Nine Hours

The ARC Prize is designed to push AI to its limits. It asks systems to infer hidden rules from only a few examples. Most models fail. With no formal coding background, I attempted a solution in Python over nine hours. Many paths collapsed, but persistence eventually produced REAP, a solver that cracked 11 tasks. The method relied on intuition, symbolic reasoning, and creative iteration. The outcome was not a breakthrough in brute force but a reminder that curiosity matters as much as compute. Progress toward advanced intelligence will not be measured only in scale. It will also come from small, stubborn experiments that push the edge of possibility.
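
For readers who have not seen an ARC task, the sketch below shows what "inferring a hidden rule from a few examples" can look like in Python. It is not REAP and does not reproduce its method. It is a toy illustration that assumes the public ARC layout, where a task holds `train` and `test` lists of `input`/`output` grids of integers. The candidate transformations and the `toy_task` data are invented for the example.

```python
def identity(grid):
    return [row[:] for row in grid]


def flip_horizontal(grid):
    # Mirror each row left to right.
    return [row[::-1] for row in grid]


def flip_vertical(grid):
    # Reverse the order of the rows.
    return [row[:] for row in grid[::-1]]


def transpose(grid):
    return [list(column) for column in zip(*grid)]


# A deliberately tiny hypothesis space; real tasks need far richer rules.
CANDIDATES = {
    "identity": identity,
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}


def infer_rule(train_pairs):
    """Return the name of the first candidate consistent with every training pair."""
    for name, transform in CANDIDATES.items():
        if all(transform(pair["input"]) == pair["output"] for pair in train_pairs):
            return name
    return None  # none of the candidate rules explains the examples


# Made-up task in the ARC style: two demonstrations, one test input.
toy_task = {
    "train": [
        {"input": [[1, 0], [2, 0]], "output": [[0, 1], [0, 2]]},
        {"input": [[3, 3, 0]], "output": [[0, 3, 3]]},
    ],
    "test": [{"input": [[5, 0, 0], [0, 6, 0]]}],
}

rule = infer_rule(toy_task["train"])
prediction = CANDIDATES[rule](toy_task["test"][0]["input"]) if rule else None
print(rule, prediction)
```

The gap between this toy and a real solver is the point of the benchmark: most ARC rules are not in any small list of candidates fixed in advance.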

The AI Hall of Mirrors: When Consensus Becomes an Illusion

When three different systems independently critiqued my persona Solomon and reached the same conclusion, it looked like validation. In fact, it was a hall of mirrors. Recursive echoes created the appearance of consensus, but consensus was only repetition. Eloquence can mislead, and agreement can mask blind spots. The lesson is simple. Agreement among models is not proof of truth. Without grounding in human judgment and real-world testing, validation risks becoming illusion. AI can sharpen ideas, but it cannot certify them. Only human discernment can separate reflection from echo.

From Frameworks to Chaos: Testing AI in a Crisis Scenario

What happens when AI is dropped into a boardroom crisis with fractured alliances and incomplete data? I tested this by simulating a mutiny scenario. Traditional frameworks collapsed under the weight of uncertainty. Yet Solomon adapted, not with formula but with improvisation. One method stood out. By forcing adversaries to steel-man each other’s arguments, conflict transformed into structured dialogue. The exercise revealed AI’s potential as a crisis partner. It does not simply repeat frameworks. It improvises, centering on trust, legitimacy, and power dynamics. In unpredictable conditions, this kind of adaptability matters more than perfection.

Can AI Advise the Boardroom? Stress-Testing a Strategic AI System

Boardrooms face dilemmas that resist simple answers: automation at scale, collapsing intellectual property models, censorship paradoxes. Generic AI tools usually respond with shallow pros and cons. Solomon performed differently. It produced phased roadmaps, structured strategies, and narratives that could be critiqued by multiple models for resilience. The outcome was not replacement of leadership judgment but sharpening of it. AI became a sparring partner, generating perspectives under pressure and exposing blind spots. Leaders still decide. Yet when the system is designed for reasoning, it can expand the field of options. Strategy becomes less about guessing and more about modeling resilient paths forward.

A Pivotal Conversation: Learning from Dominique Shelton Leipzig on AI Governance

I had the privilege of a long conversation with Dominique Shelton Leipzig, a leading authority on privacy and AI governance. The exchange offered insights, resources, and guidance that I could not have accessed otherwise. It marked a turning point in my work, clarifying how governance must blend law, ethics, and lived context. For me, it underscored the importance of mentorship in a field that too often moves faster than reflection. Progress is not only technical. It is also relational.

The AI Arms Race in Hiring: Why Everyone Loses

Hiring has become an arms race of algorithms, and everyone is losing. Job seekers optimize résumés for machines. Recruiters drown in applications generated by AI. Companies pursue return on investment that rarely arrives. Instead of solving the problem, technology has amplified it. The process becomes a prisoner’s dilemma where human connection is the casualty. Trust between employer and applicant erodes, replaced by optimization loops with little value. Real progress will come not from more tools, but from re-centering on dignity, clarity, and fairness in the hiring process.

Silence Speaks: What Job Applications Reveal About Company Culture

I once applied for a role and heard nothing. No confirmation, no rejection, only silence. Out of curiosity, I filed a privacy request under the California Consumer Privacy Act. Within 48 hours, the company responded. The experience was striking. My data rights were honored faster than my humanity. Silence in hiring speaks volumes about culture. It reveals where respect is allocated and where it is withheld. In the long run, this silence is not neutral. It is a signal about how organizations treat people before they even walk in the door.

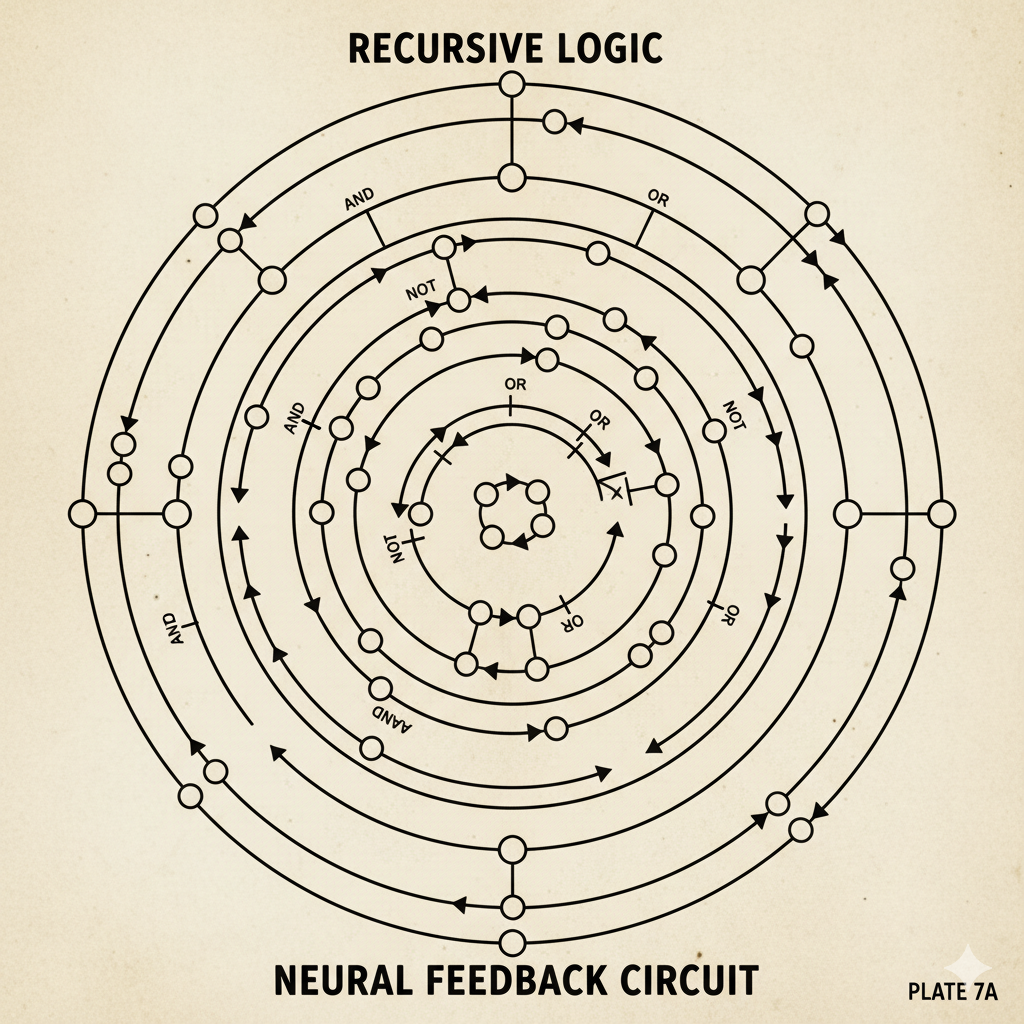

Walter Pitts GPT: A Recursive Thought Architecture for Structural Insight in AI Dialogue

Most conversational AI systems excel at smooth replies but hide the logical structures that shape dialogue. The Walter Pitts GPT proposes a different design. It emphasizes structural analysis, logical resilience, and recursive emergence. The inspiration comes from Walter Pitts’ early work in neural logic, which sought to reveal the architecture of thought. This framework does not treat AI as a conversational partner alone. It treats AI as an instrument for exposing structure, clarifying reasoning, and making recursion visible. The value lies less in fluency and more in transparency. AI becomes a tool for mapping thought, not just for simulating it.