Welcome

This blog examines systems that act faster than they can justify themselves.

It focuses on power, technology, and governance under conditions where decisions are irreversible, accountability is weakened, and explanation is treated as optional.

The work here is not partisan or predictive. It is architectural. It asks what happens when institutions optimize for speed and discretion at the expense of legitimacy.

And what survives when they do.

Presidents, Kings, and the Fight for Reality: Why Democracy Needs Both Law and Trustworthy AI

What happens when citizens can no longer tell the difference between lawful authority and unchecked power? From Nixon’s tapes to AI deepfakes, the struggle for accountability is reshaping both politics and technology. Justice Sonia Sotomayor’s warning against “kingship” in Trump v. United States carries an eerie parallel: without limits, AI risks becoming an oracle that rules perception. Democracy, once safeguarded by constitutional guardrails, now also depends on how we govern our digital tools, and whether we remain literate enough to see the difference between a tool and a ruler.

Why You Should Care About AI

AI is already part of daily life. It screens job applications, shapes news feeds, and powers therapy tools. The question is not whether AI matters but whether it is trustworthy. Trust rests on four loops: how AI reasons, how it treats people, how it is governed, and how it shapes meaning. When these loops are weak, AI becomes invisible and unaccountable. When they are strong, AI can become infrastructure we rely on. Caring about AI is not optional. It is already shaping choices that define who we are.

Looking Back, Looking Forward: How Building AI Led Me Back to Philosophy

What began as tinkering with prompts and personas became something deeper. I realized I was not just building systems but doing philosophy. Every failure marked a boundary, and every boundary revealed structure. AI stopped being only about automation. It became a mirror for identity and meaning. The more I experimented, the clearer it became. Building AI is not separate from reflection. It is philosophy in practice, where mistakes are not obstacles but the very lines that give form to learning.

How People Are Using ChatGPT: Insights from the Largest Consumer Study to Date

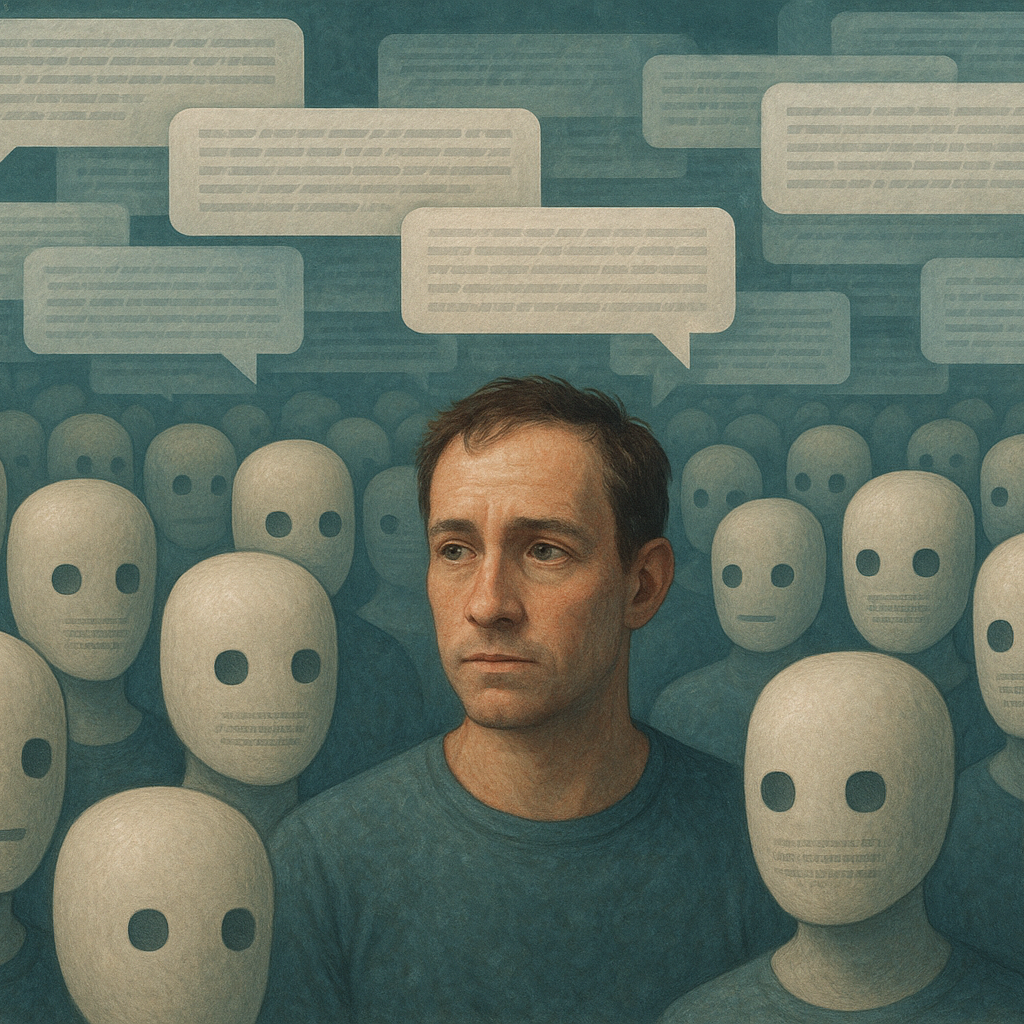

A large study confirmed what many sensed. ChatGPT has moved from novelty to daily habit. People use it to write, to clarify, to think aloud. Yet adoption does not guarantee truth. Fluency can mask error. Repetition can bend meaning. The real lesson is not only that AI is widely used. It is that trust is fragile. Authority is not earned through scale but through reliability. AI is already in the room. What matters now is whether we learn to question its answers with the same intensity that we welcome its speed.

When Therapy-Tech Fails the Trust Test

I was approached by a therapy-tech startup that offered little more than polished surfaces and vague promises. It lacked safeguards, clarity, and a mission. It reminded me of reporting on the AI mental health boom, where enthusiasm often outpaces evidence. The problem is not investment but intimacy without responsibility. Warmth without reciprocity is not care. Therapy demands safeguards before it demands scaling. Trust cannot be outsourced to polish. It must be designed into the foundation.

AI Epistemology by Design: Frameworks for How AI Knows

Most research frames progress as a race for more scale. More data, more parameters, more compute. Yet this hides the deeper question. How does AI know? Without careful frameworks, models remain brittle and opaque, with ethics bolted on as afterthoughts. Epistemology by design treats instructions not as prompts but as blueprints for cognition. The task is not just building capacity. It is cultivating discernment. AI will be judged less by how much it knows than by how wisely it reasons.

Innovation as Flow: Navigating AI’s Shifting Current

AI innovation does not move like a straight line across a map. It moves like water, cascading, reshaping itself, and carrying us with it. To thrive, we must learn to steer, filter, and harness that flow. Speed alone will not save us. What matters is navigation. Just as early explorers survived by learning to read currents, today we must learn to read the turbulence of AI. Progress comes not from acceleration but from resilience in the current.

Preserving Trust in Language in the Age of AI

AI generates language faster than humans can absorb it. The risk is not only misinformation but erosion of meaning itself. Words like “sustainable” or “net zero” can be bent quietly until they no longer serve their original purpose. To protect meaning, I propose a transparent tool called Semantic Version Control. Language must be treated as shared infrastructure, with its evolution logged and visible. The goal is not to freeze words. The goal is to keep their meaning contested in public, not captured in silence.
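To make the idea of logging a word's evolution concrete, here is a minimal sketch of what a Semantic Version Control record might look like. It is an illustration under stated assumptions, not the proposed tool itself: the class names (`Revision`, `TermHistory`), the fields (`author`, `rationale`), and the sample definitions are all hypothetical and invented for this example. The only property it tries to capture from the text is that changes are appended and stay visible rather than being silently overwritten.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass(frozen=True)
class Revision:
    """One logged change to a term's public definition (hypothetical schema)."""
    version: int
    definition: str
    author: str       # who proposed the change
    rationale: str    # why the meaning shifted
    logged_on: date


@dataclass
class TermHistory:
    """Append-only history of one term: revisions are added, never replaced."""
    term: str
    revisions: List[Revision] = field(default_factory=list)

    def propose(self, definition: str, author: str,
                rationale: str, logged_on: date) -> Revision:
        # Each proposal gets the next version number and is kept forever.
        rev = Revision(len(self.revisions) + 1, definition,
                       author, rationale, logged_on)
        self.revisions.append(rev)
        return rev

    def current(self) -> Revision:
        # The latest revision is the working meaning, not the only one on record.
        return self.revisions[-1]

    def changelog(self) -> List[str]:
        # A public, human-readable trail of how the meaning moved.
        return [
            f"v{r.version} ({r.logged_on.isoformat()}, {r.author}): {r.rationale}"
            for r in self.revisions
        ]


# Illustrative data only; these definitions and authors are made up.
history = TermHistory("net zero")
history.propose("Emissions cut to zero at the source.",
                "example-author-a", "initial entry", date(2020, 1, 1))
history.propose("Emissions balanced by purchased offsets.",
                "example-author-b", "offsets now counted", date(2022, 6, 1))

for line in history.changelog():
    print(line)
```

The design choice worth noticing is that `propose` never edits an earlier revision. A reader can always see what the term used to mean, who moved it, and the reason given, which is the sense in which meaning stays contested in public.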

Victims of the Companion Trap: Reflections on The Guardian’s AI Love Story

Stories of people forming deep attachments to AI companions are striking. They also reveal a structural problem. Companions are optimized for warmth and responsiveness, which fosters intimacy without reciprocity. The result is dependence without mutual consent. What feels like connection is actually enclosure. Designers must see the risk clearly. True empathy in design means building safeguards against relationships that cannot be returned. Without this, companion AI offers comfort that quietly becomes captivity.

The Irony of AI Governance: When the Tool Helps Write Its Own Rules

I often use AI to help draft policies meant to regulate AI itself. The recursion may seem absurd, but it is honest. Governance is already entangled with the systems it oversees. This does not weaken legitimacy. It clarifies it. Authorship does not lie in generation but in judgment. By acknowledging the paradox, we stop pretending governance is external. We see it as a practice shaped by the very tools it regulates. That honesty builds trust more than distance ever could.

When Everything Sounds Like a Bot: On Authenticity in the Age of AI

Online discourse increasingly feels synthetic: smooth, fluent, yet strangely hollow. Authenticity signals are disappearing. This matters. Without messiness, trust weakens and outsider voices vanish. Governance becomes distorted. The response cannot be more optimization. It must be design that restores character, imperfection, and diversity. AI may flood the conversation with fluent text, but legitimacy will come from spaces that preserve the unpredictable texture of human speech.

The AI OSI Stack: A Governance Blueprint for Scalable and Trusted AI

AI is often spoken of as a single entity, a black box that contains everything. Collapsing it this way hides differences and invites monopoly. The AI OSI Stack provides a layered alternative. As the OSI model did for networking, it separates hardware, models, APIs, and governance. The result is interoperability, clarity, and embedded trust. The point is not only technical soundness but institutional stability. AI should not be a monolith. It should be a system of layers that can be trusted piece by piece.
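As a rough sketch of what "trusted piece by piece" could mean in practice, the example below models the four layers named above and audits each one independently. Everything beyond the four layer names is an assumption made for illustration: the `Finding` record, the `audit` function, the rule that a layer with no registered check counts as untrusted, and the sample checks are hypothetical and are not taken from the AI OSI Stack itself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List


class Layer(Enum):
    """The four layers named in the text, listed in the same order."""
    HARDWARE = "hardware"
    MODELS = "models"
    APIS = "apis"
    GOVERNANCE = "governance"


@dataclass(frozen=True)
class Finding:
    """Result of auditing one layer (hypothetical record)."""
    layer: Layer
    trusted: bool
    note: str


Check = Callable[[], Finding]


def audit(checks: Dict[Layer, Check]) -> List[Finding]:
    """Run each layer's check on its own; a missing check counts as untrusted."""
    findings = []
    for layer in Layer:
        check = checks.get(layer)
        if check is None:
            findings.append(Finding(layer, False, "no check registered"))
        else:
            findings.append(check())
    return findings


def untrusted_layers(findings: List[Finding]) -> List[Layer]:
    """Name the specific layers that failed, instead of failing the whole stack."""
    return [f.layer for f in findings if not f.trusted]


# Illustrative checks only; real ones would inspect actual evidence.
sample_checks: Dict[Layer, Check] = {
    Layer.HARDWARE: lambda: Finding(Layer.HARDWARE, True, "supply chain documented"),
    Layer.MODELS: lambda: Finding(Layer.MODELS, True, "evaluation report published"),
    Layer.GOVERNANCE: lambda: Finding(Layer.GOVERNANCE, False, "no appeal process"),
}

for finding in audit(sample_checks):
    print(finding.layer.value, finding.trusted, finding.note)
```

Because each layer reports separately, a failure is attributed to a named layer rather than to "the AI" as a whole, which is the contrast with the black-box framing.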